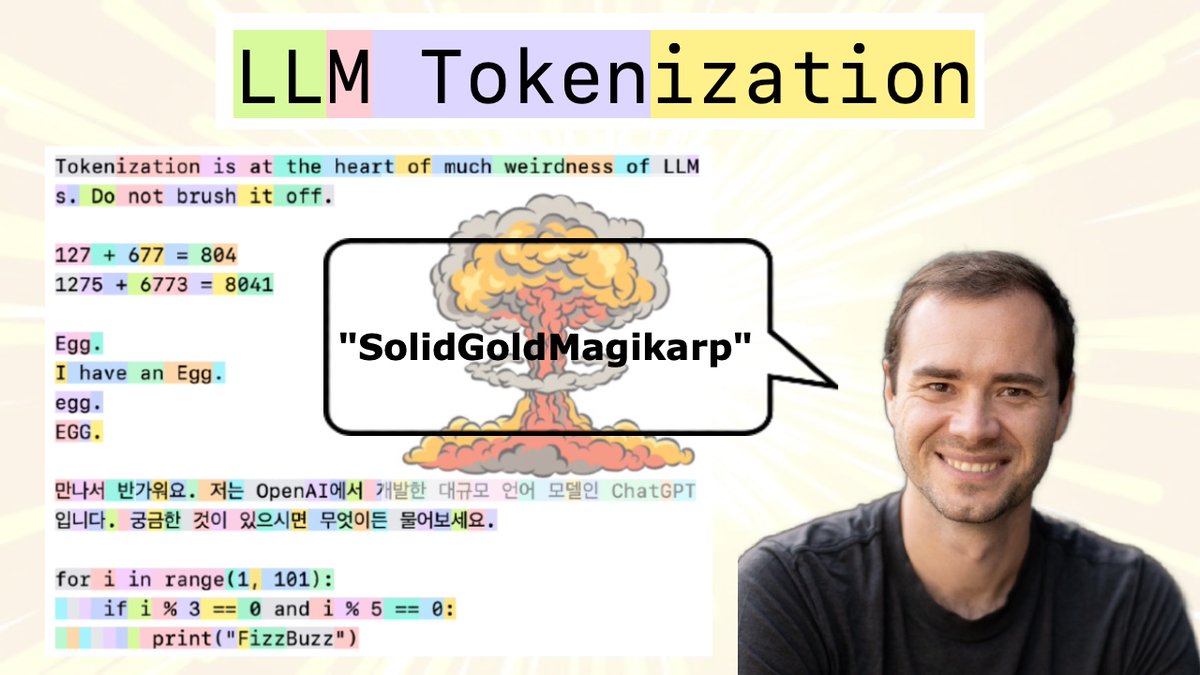

Tokenizer

> @karpathy: New (2h13m 😅) lecture: "Let's build the GPT Tokenizer"

>

> Tokenizers are a completely separate stage of the LLM pipeline: they have their own training set, training algorithm (Byte Pair Encoding), and after training implement two functions: encode() from strings to tokens, and…