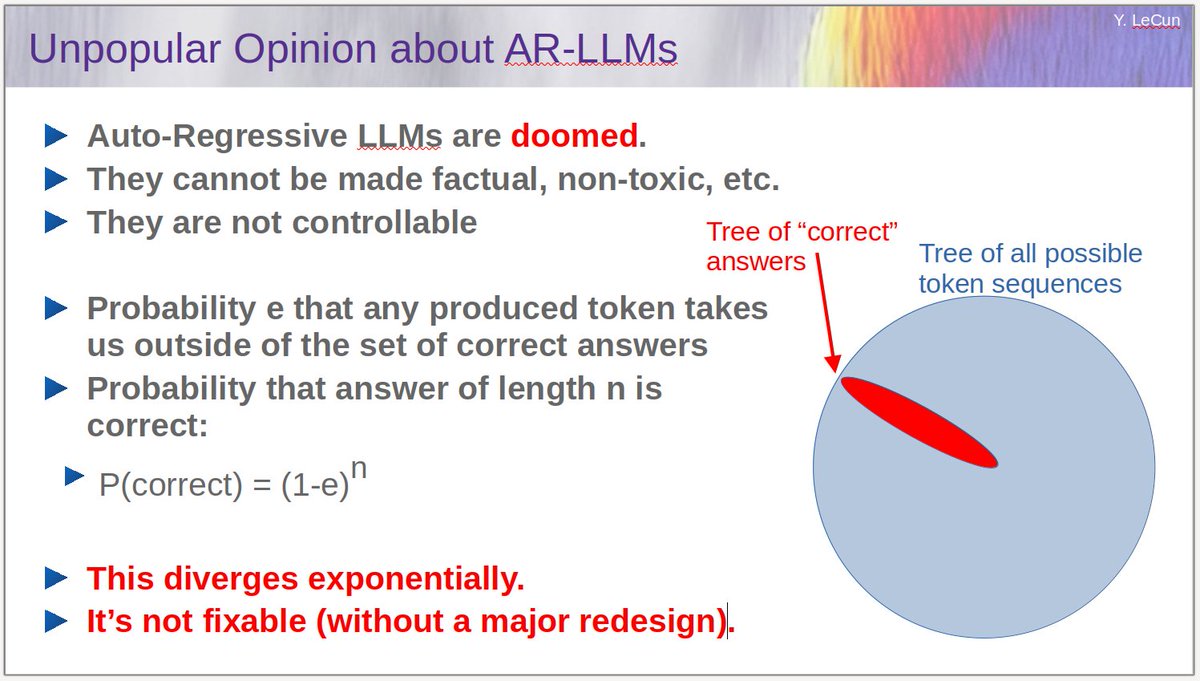

自己回帰モデルのLLMは必ず誤る

> @ylecun: I have claimed that Auto-Regressive LLMs are exponentially diverging diffusion processes.

> Here is the argument:

> Let e be the probability that any generated token exits the tree of "correct" answers.正しい答えのtreeから外れる確率

> Then the probability that an answer of length n is correct is (1-e)^n

長さnの回答が正しい確率は(1-e)^n

> 1/

> @ylecun: Errors accumulate.

> The proba of correctness decreases exponentially.

> One can mitigate the problem by making e smaller (through training) but one simply cannot eliminate the problem entirely.trainingを通じてeを小さくすることで問題を小さくできるが解決はできない

> A solution would require to make LLMs non auto-regressive while preserving their fluency.

LLMを流暢さを保持しながら自己回帰をやめることが必要

> @ylecun: The full slide deck is here.

> This was my introductory position statement to the philosophical debate

> “Do large language models need sensory grounding for meaning and understanding?”

> Which took place at NYU Friday evening.

>

> @ylecun: I should add that things like RLHF may reduce e but do not change the fact that token production is auto-regressive and subject to exponential divergence.

関連